I’m lying in my bed, scrolling through endless reels. The Screen Actors Guild–American Federation of Television and Radio Artists (SAG-AFTRA), led by actress and producer Fran Drescher, and Hollywood’s Writers Guild of America are on strike for better working conditions and to protect screenwriters from being reduced to merely editing AI-generated film scripts in the future. Another white dude with a podcast tells me how I can finally ask ChatGPT the right questions. Between meal-prepping videos and other life hacks, ads keep trying to sell me the six, three, or ten most important AI tools. So many ways to maximize my efficiency today. I would probably be most efficient if I just put my phone down and got up, but the endless, algorithmically curated feeds in my social media apps won’t let me go. Where have the last two hours gone again?

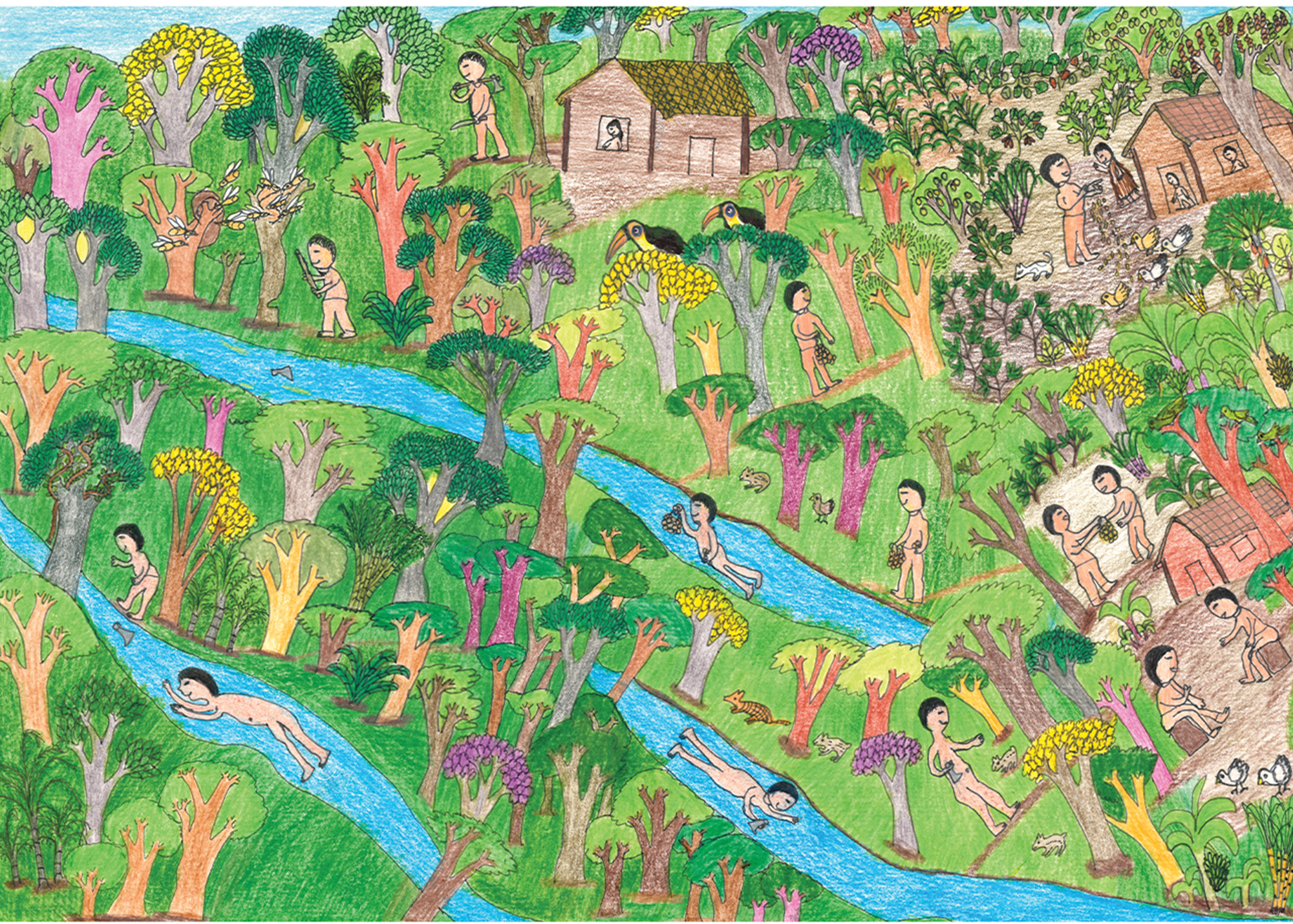

Artificial intelligence and the commodification of time are closely linked. Tech companies sell AI as the future; they sell machine-generated efficiency and, with it, the promise of saving labor and time. Narratives of the singularity (artificial intelligence surpassing human intelligence) and transhumanism (the fusion of humans and machines), often depicted in science fiction, become anchored as seemingly immovable futures and are eagerly taken up by Silicon Valley as inspiration. Smartwatches, self-driving cars, virtual reality (VR) glasses, and robots first existed as fabulations in books and movies before tech companies turned them into reality. These enterprises thrive on tech determinism: the idea that social change is brought about solely by technology, and that society constantly evolves through technology in a linear process, which is then called “progress.” Yet time has not always and everywhere been understood as linear. The ancient Greeks discussed many different conceptions of time, and Indigenous civilizations like the Maya and Inca narrated cyclical mythologies.

The idea of linear time, in which society constantly improves through technological progress, is a fundamental driver of investment in automation. However, automation is only implemented if it is financially viable. Sociologist and author Peter Frase demonstrates this in his book Four Futures. Frase describes how stricter controls at the US–Mexico border since 2015 led to a decline in the availability of undocumented Mexican workers on Californian plantations. In response, US farmers invested in new machinery that replaced human labor. This substitution relationship between migrant workforces and robotization can be seen in other geographical contexts as well. A Danish survey from 2024 predicts that restrictive immigration policies will lead to an increase in robot adoption. While this development will put low-skilled workers in competition with robots and push their wages down, it also reflects a recurring capitalist dynamic, as Frase describes: “The more powerful and better-paid workers become, the greater the pressure on capitalists to automate.”

“OpenAI is making billions in new investments, while clickworkers are experiencing psychological harm and potentially long-term consequences to their mental health.”

The relationship between low-cost labor and automation reveals that AI’s much-promised efficiency mainly benefits those who are already affluent. This is evident in agriculture, on Tesla’s assembly lines, and in Amazon’s warehouses. ChatGPT also shows how unjust social structures are reinforced with the help of technology. OpenAI’s chatbot responds to potentially violent requests with: “As an AI language model, I am programmed to follow strict ethical guidelines, which include avoiding promoting violence, harm, or engaging in any harmful activities. I am here to provide useful and informative answers while maintaining a safe and respectful environment.” To make this possible, however, precariously employed clickworkers in Kenya first had to teach the system what to filter out. For less than two US dollars an hour, they labeled tens of thousands of text samples describing violence, hate speech, sexual abuse, bestiality, murder, suicide, torture, self-harm, and incest for Sama, a San Francisco-based company that supplies AI training data. With the help of these examples, ChatGPT can now detect and filter violent content to protect its users. OpenAI is making billions in new investments, while clickworkers are experiencing psychological harm and potentially long-term consequences to their mental health.

In 2022, Time Magazine published an article calling out Facebook for the horrendous circumstances under which its subcontractor Sama employed clickworkers in Kenya for content moderation. In response, several customers distanced themselves from the company, and Sama canceled its contracts with OpenAI and Facebook. This cycle of exploitation, disclosure, and distancing is an old game. It doesn’t change the fact that Silicon Valley’s tech companies are constantly looking for new subcontractors, whose employees are often based in the Global South and depend for their survival on clicking their way through human abysses for pennies.

The bitter reality is that we need clickworkers if we want to use AI tools without exposing ourselves to violent content. To move through social media more safely and write our emails, assignments, or love letters more quickly and easily, we collectively accept the traumatization these workers suffer through digital care work. Gag clauses also prevent clickworkers from unionizing and demanding better working conditions, such as access to proper mental health resources and fairer wages. Employees are sometimes not even allowed to talk to each other about their work, let alone to the outside world. If they do, they often lose their jobs.

“Algorithms also create a time loop—basing predictions of the future on data that inevitably represent the past.”

This rigorous monitoring of workforces is reminiscent of 19th-century industrialization. Back then, clocks, and thus control over working hours, were reserved exclusively for factory managers. If workers had a clock, it was taken away from them so that they couldn’t check their working hours themselves. Today, this practice returns in a perfidious new form: law professor Veena Dubal has researched how gig workers such as Uber drivers are offered different wages for the same work (and working hours) by algorithmically controlled systems that set rates based on unknown and unpredictable factors, which remain opaque to individual drivers.

AI’s influence on time, however, is not limited to Uber’s elusive wage algorithms. Algorithms also create a time loop, basing predictions of the future on data that inevitably represent the past. AI systems marketed as future-oriented can therefore, paradoxically, only reproduce what they already know. By perpetuating bias and reinforcing homogeneity, AI benefits dominant groups in many important areas of life, such as the job market or housing. Facebook’s algorithms could once explicitly display advertisements to white people only; now, despite the removal of the “ethnic affinities” category, racial proxies like “user interests” can still be selected. Meanwhile, clickbait ads on Google promising to delete arrest records for a substantial sum specifically targeted Black users; AI recruiting software leads companies to hire employees who resemble their previous employees; and risk assessment software used in courts to predict how likely prisoners are to re-offend discriminates racially against Indigenous and Black people.

“The promise of ‘better’ methods and knowledge overrules pressing issues.”

It seems we are damned either way: while Hollywood’s dystopian tales of robot doomsday scenarios inspire Silicon Valley companies to build the very technologies they depict, utopian tech-will-save-the-world ideas are not much better. They often ignore underlying systemic issues and primarily help the producers of the latest software and gadgets generate revenue. In healthcare systems, for example, much hope is placed in algorithms to provide personalized prevention and treatment. In reality, the desire to implement AI in public healthcare systems often leads to what Klaus Hoeyer, professor and scholar of Medical Science and Technology Studies, calls “postponement.” In his paper “Data as Promise,” Hoeyer describes how authorities postpone systemic change on the pretext that vast amounts of data must first be collected and analyzed before action can be taken. Hoeyer notes that “by claiming that it is better to wait until more data have been accumulated, data promises thus generate a form of temporal disruption of public accountability.” The promise of “better” methods and knowledge overrules pressing issues. Instead of authorities acknowledging what we already know, and thereby what we could already do in the present, Hoeyer argues, “responsibility becomes redefined.” Delaying the use of available tools appears responsible in the light of future promises.

But who has time to think about the future? Time works like money: if you have capital, you can increase it. We urgently need other visions of the present and future for a life with technology: visions that are not essentially based on exploiting people and nature, setting clicks and online time as a central value, and making users dependent on digital devices with dopamine trap apps.

Futures are actively created every day. They don’t just happen to us. Together with a network of artists, researchers, and activists, I am trying to create spaces for these visions of other futures. The transdisciplinary collective Dreaming Beyond AI, which I’m part of, organizes artist-activist residencies to dream up alternative visions of the future and make them more tangible. Another organization advocating for more just digital futures is the non-profit SUPERRR Lab. In 2021, we created the Feminist Tech Principles, a visionary manifesto by various activists, artists, educators, technologists, and writers, such as Neema Githere, who envisions Data Healing Recovery Clinics in which we can collectively heal the damage that big tech companies have done to our bodies. In 2023, SUPERRR Lab also developed the Content Moderators Manifesto, which calls for professional psychological support, legal grounds to form unions, and an end to surveillance and cyber-bullying. In the current climate, these ideas and demands may sound utopian, but they really shouldn’t be too much to ask. Perhaps we can collectively take to heart Logic(s) Magazine’s motto, which flips Silicon Valley’s destructive “Move fast and break things” into a more sustainable vision: Let’s “Move slow and heal things.”

This text was originally published in German in Missy Magazine, issue #74, in 2023. It was slightly edited and translated by the Futuress team.

Nushin Yazdani (she/they) is a transformation designer, artist, and AI design researcher working at the intersection of machine learning, design justice, and intersectional feminist practices. Nushin curates and organizes community events as one of the Dreaming Beyond AI co-founders, and researches data worker struggles in AI. Nushin is an EYEBEAM and Landecker Democracy Fellow, and has worked as a design researcher and project manager at SUPERRR Lab, as a lecturer at various universities, and with the queer-feminist collective dgtl fmnsm.