“Go home. We love you. You’re special.” This is how Donald J. Trump ended a video message to his supporters who stormed the Capitol on January 6, 2021. Among them were members of the Proud Boys, Oath Keepers, the Boogaloo movement, QAnon and the Three Percenters, all white supremacist terrorist groups that have repeatedly been linked to racist death threats and violence. By the time the message was tweeted, the terrorists had already been inside the Capitol building for two hours, facing little resistance, especially compared with the full police force deployed against Black Lives Matter protests only a few months earlier. “Special” indeed was the lenient treatment of these groups, emboldened by a declaration of love from Trump.

What does that language of love do? What happens when hate and fascism circulate in the language of love?

This is not a new phenomenon. In 2003, Sara Ahmed published In the Name of Love, a paper exploring how various U.S. white nationalist hate groups rebranded themselves as “organisations of love,” exposing the misconception that “love” belongs to a single ideology. In Europe, where I live, nationalist far-right parties have gained visibility and power in recent years. Most of them have developed online communication campaigns and make extensive use of the heart symbol, whether as a logo, on posters, or at online events.

Far-right ideologies traditionally communicate through fear. However, as Sara Ahmed pointed out, “It is out of love that the group seeks to defend the nation against others.” Within the politics of love, far-right groups cast themselves as the “good ones”: those who love their country, their people, and their traditions. Racism becomes a just and reasonable cause for such groups, Ahmed explains, as “the racial others become the obstacle that allows the white subject to sustain a fantasy that without them, the good life would be attainable, or their love would be returned with reward and value.” This opens space for a political narrative that calls for stricter border control and more deportations, all grounded in the idea of a white nation.

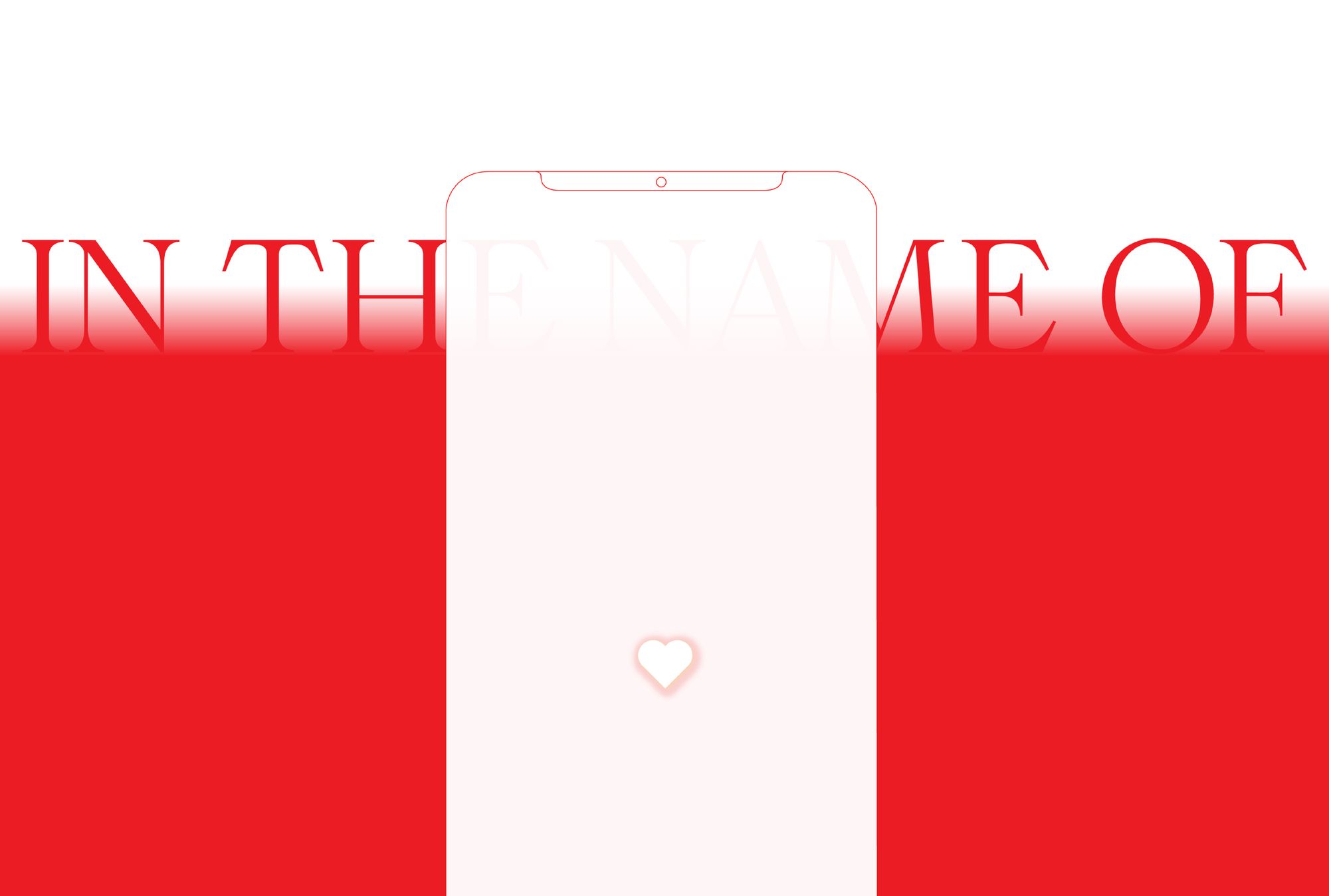

I propose to look at the frightening discourse of love through its visual representation: the heart symbol. How did the heart come to signify love in the first place? And what stories are hidden beneath its surface?

A brief (and biased) history of the heart

Many times during my own design studies, I was taught that communication is a global tool, and that symbols, created by European and North American designers, are universal. But this idea of universality is fabricated: every piece of visual culture echoes its particular context, environment, culture and language. This is also true for the heart symbol.

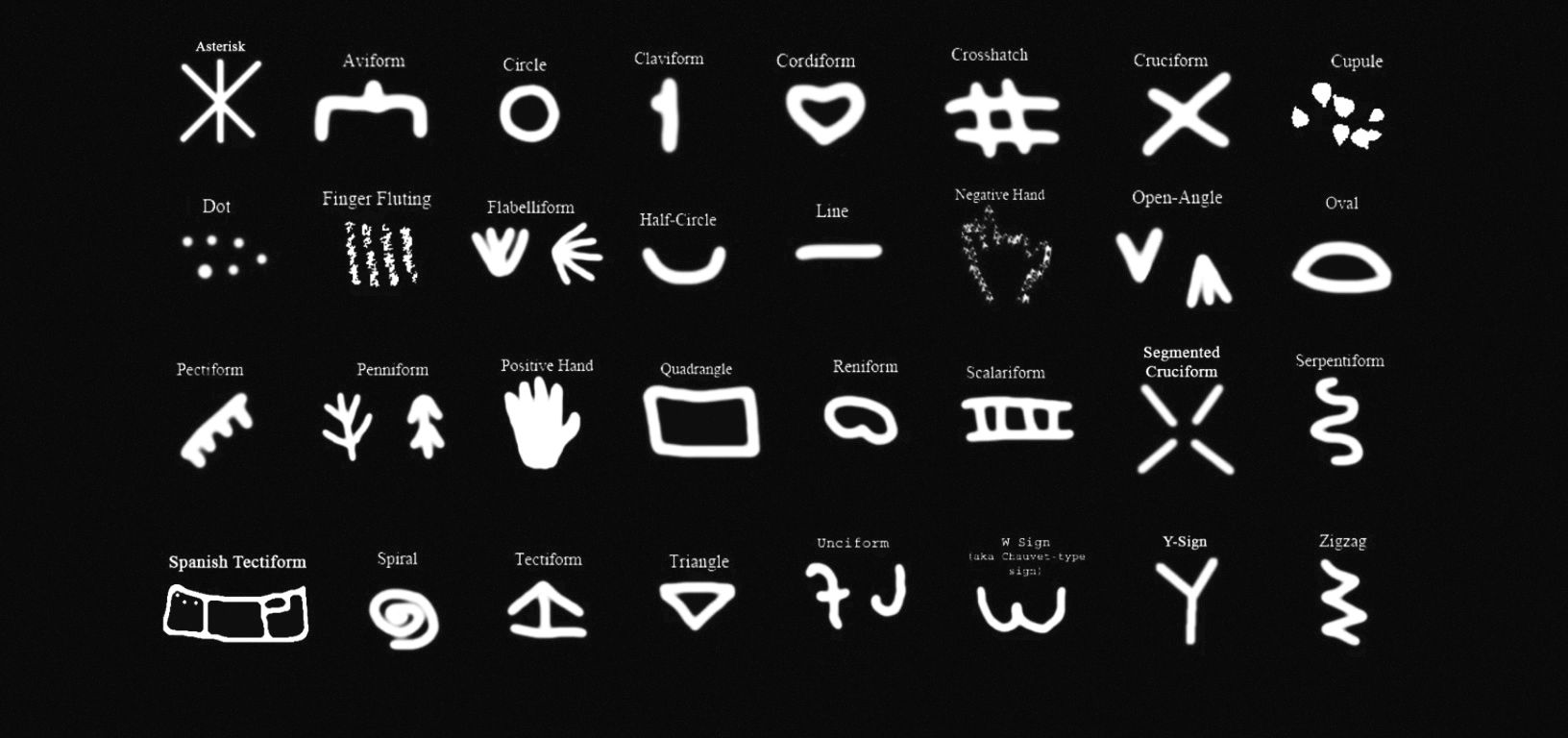

In 2016, paleoanthropologist Geneviève von Petzinger classified 32 signs found on cave walls across Europe. One of them, the “cordiform” (from Latin cor, heart, and forma, form), resembles today’s heart symbol. The earliest examples date back some 40,000 years, and cordiforms continued to appear for the following 30,000 years. Von Petzinger also found that some of these signs were already in use when humans migrated out of Africa, which challenges the traditional narrative that cave art originated in Europe.

It is unclear how the cordiform came to be associated with the heart itself, and how it became a signifier of love. Western historians present theories that closely follow European history. According to Dutch scholar Pierre J. Vinken, the earliest known depiction of a heart as a sign of love dates back to the 13th century, in a miniature from the medieval French Roman de la Poire. Afterwards, the heart symbol came to be used in love songs and romances across Europe. British art historian Martin Kemp suggests that courtly romantic poetry, shaped by the nobility and the ideals of chivalry, influenced the transformation of playing cards in Europe: when Egyptian Mamluk cards spread across the continent in the 14th century, their Islamic calligraphy was replaced by kings and queens ornamented with symbols including the heart.

In the early 16th century, cordiform (heart-shaped) maps, such as those drawn with the Werner projection, emerged while European colonial empires were establishing colonies and enslaving populations in Africa, the Atlantic Ocean, the Americas, the Pacific Ocean, and East Asia to extend their economic dominance and military conquests. At the same time, popular European images of the heart as the essence of love included devotions such as the “Heart of Christ” and the “Immaculate Heart of Mary.” These images show open hands from which beams of light rise; such devotions were often invoked in colonial blessings to justify colonialism and “civilising” missions between the 14th and 20th centuries.

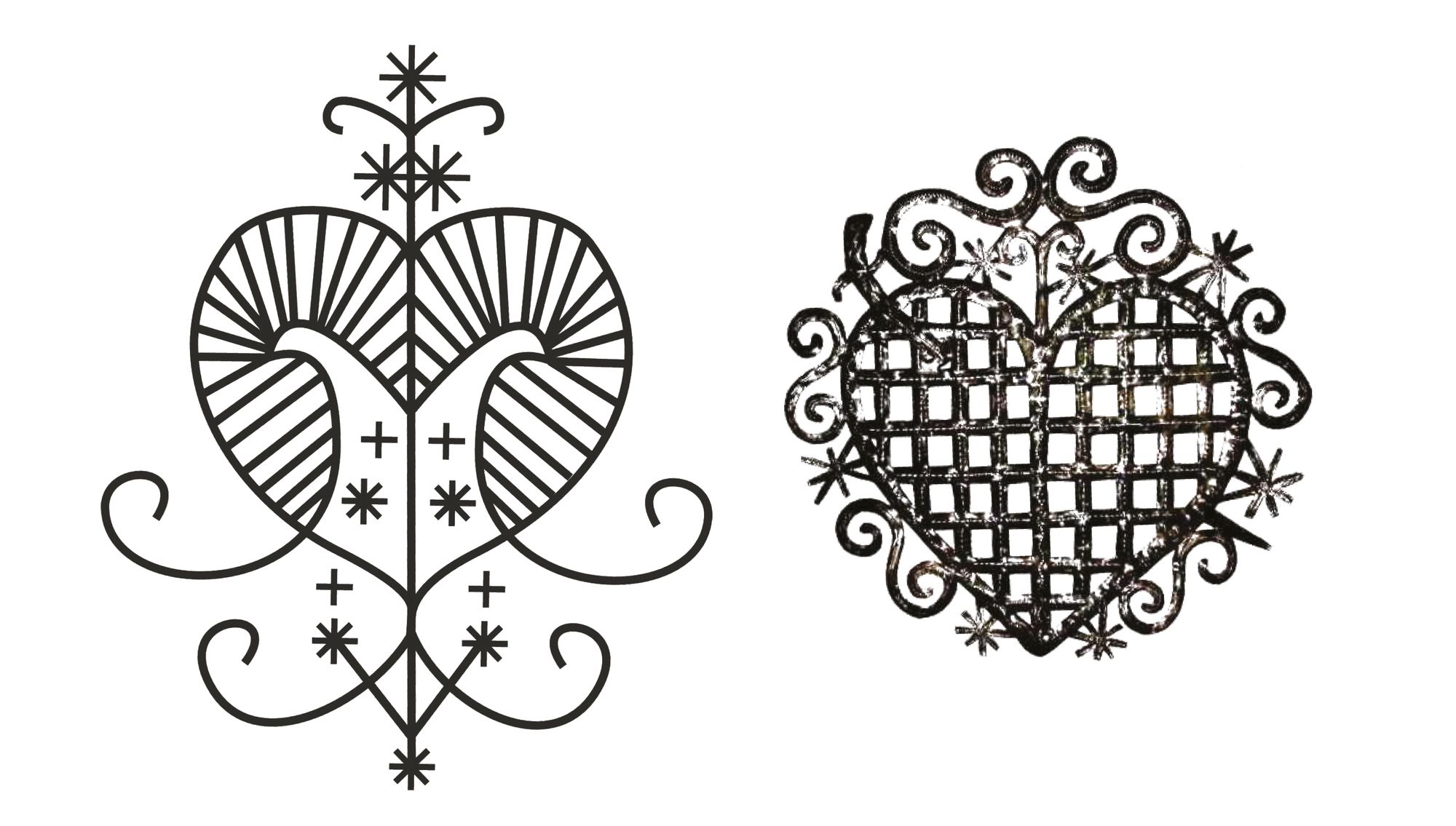

Haitian Vodou is a religion with West African roots that developed under the French colonial empire and the Atlantic slave trade. Yoruba populations were enslaved, shipped to the Americas and forced to convert to Christianity. Vodouists believe in Bondye (from the French “Bon Dieu,” meaning “Good God”), and Catholic saints became merged with the lwa, the spirits of traditional Vodou. Each lwa has a vèvè, a symbol traditionally drawn with wheat flour. Many Haitian vèvès feature a heart symbol and are often associated with female figures painted in the tradition of the Virgin Mary.

Civilising missions deeply influenced the construction of colonial narratives, and these narratives persist today in our language and symbols. In that sense, the spread of the heart symbol appears to be less a universal story than a story about the domination of territories.

The corporate heart

A few centuries later, the cordiform is now used in advertisements and brand logos. In 1977, U.S. graphic designer Milton Glaser designed the I ♥ NY® logo. Created and trademarked for a marketing campaign to promote tourism in New York, the I ♥ NY® logo is licensed by the New York State Department of Economic Development. It generates millions of dollars every year, and its most profitable items (shirts, cups, key chains and other souvenirs) are largely produced outside the U.S., where labour is considerably cheaper. While production is outsourced to the Global South, the profits stay in the Global North, reinforcing the “coloniality of power,” a concept Peruvian sociologist Aníbal Quijano used to describe the lasting legacy of colonialism within modern society.


The heart symbol became popular in digital writing, from the emoticon <3 to present-day emojis. In today’s ever-expanding digital economy, emoticons and emojis carry crucial cues for interpreting casual and humorous writing, which makes up a large part of human interaction online. People’s opinions and emotions are constantly tracked and studied, and they are key inputs to machine learning. Sentiment analysis of emojis helps reveal patterns and trends, and feeds predictive analytics.

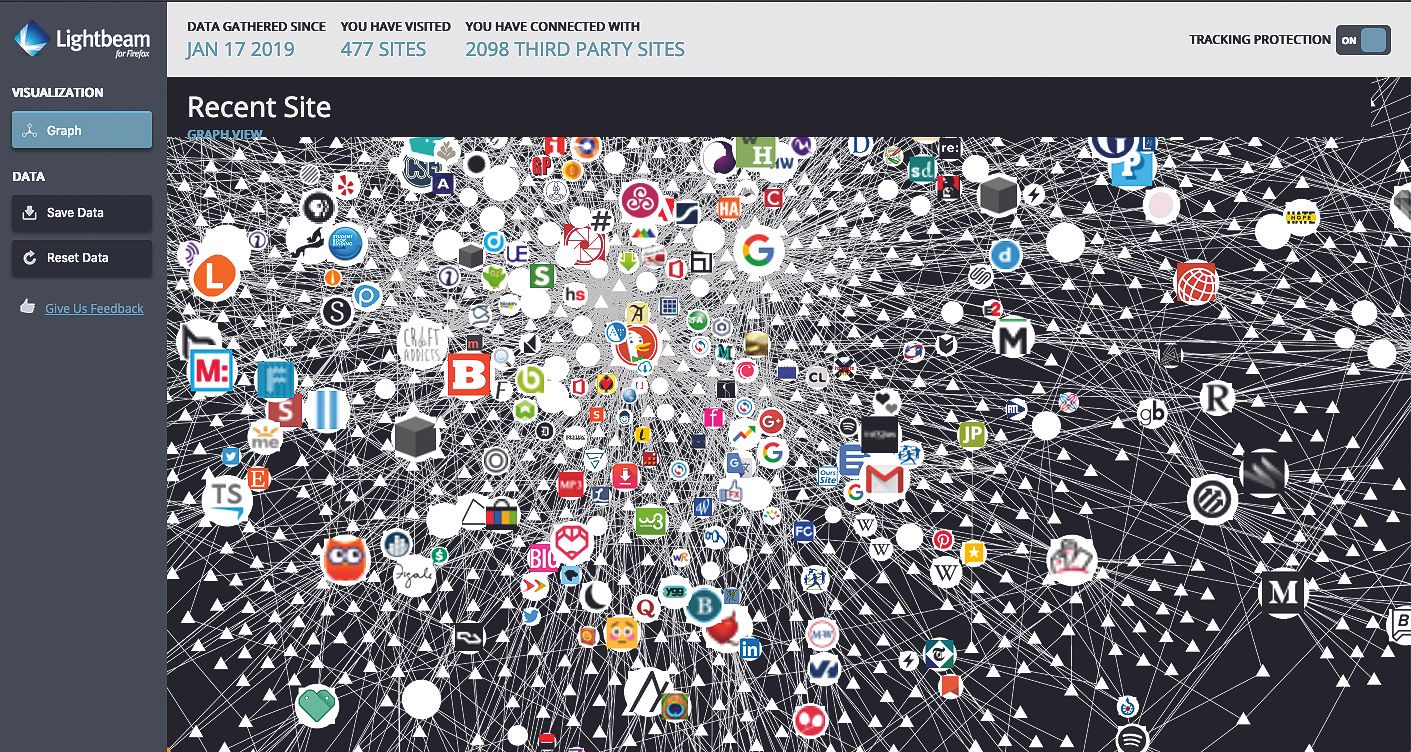

Our emotions and what we like feed “targeted advertisement” for large-scale companies, creating revenue without our knowledge or understanding. The revenues of U.S. corporations like Google and Facebook are generated primarily through data collection, targeted advertisements and online shopping, all of which rely on likes, preferences and, to a great extent, clicks on a heart symbol.

According to a 2015 study of emoji use on Twitter, four hearts (heavy black heart, black heart suit, smiling face with heart-shaped eyes, and two hearts) were among the 10 most frequently used of the 751 emojis mapped. The researchers analysed 1.6 million tweets in 13 different languages, annotated as emotionally negative, neutral, or positive, and all of the heart emojis ranked as positive. Beyond signalling love, then, the heart symbol has become synonymous with positivity. Applied to a social media or e-commerce interface, it helps fabricate an aesthetic we can relate to and trust, which in turn facilitates economic transactions and the exchange of sensitive data.
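A per-emoji sentiment ranking of this kind can be sketched in a few lines. This is a minimal sketch under stated assumptions: each tweet carries one human label (negative, neutral or positive), and an emoji’s score is taken to be the share of positive labels minus the share of negative ones, with add-one (Laplace) smoothing so that rarely seen emojis are not pushed to the extremes. The scoring rule, the `sentiment_score` function and the counts are assumptions for illustration, not the study’s code or data.

```python
from collections import Counter

LABELS = ("negative", "neutral", "positive")

def sentiment_score(labels):
    """Score an emoji from the labels of the tweets that contain it.

    Returns p(positive) - p(negative), where each probability uses
    add-one (Laplace) smoothing, so the score lies strictly between -1 and +1.
    """
    counts = Counter(labels)
    total = sum(counts[label] for label in LABELS)
    k = len(LABELS)
    p = {label: (counts[label] + 1) / (total + k) for label in LABELS}
    return p["positive"] - p["negative"]

# Hypothetical annotations, invented for illustration (not the study's data)
annotated = {
    "heavy black heart": ["positive"] * 8 + ["neutral"] * 2,
    "loudly crying face": ["negative"] * 5 + ["neutral"] * 3 + ["positive"] * 2,
}

for name, labels in annotated.items():
    print(f"{name}: {sentiment_score(labels):+.3f}")
```

With these invented counts, the heart scores +0.615 and the crying face -0.231. Once aggregated over millions of tweets, such a score is what lets a heart be read, automatically, as a sign of positivity.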

The aestheticization of trust

“Earning the trust of the people who use our service is the most important thing we do, and we are very committed to earning it,” said Facebook COO Sheryl Sandberg in a 2018 interview with CNBC. Every day, billions of people provide social networks with free labour by granting access to their data. This system is intentionally designed not to be recognised as labour: “like” buttons are fun, positive, and trustworthy. They feed a never-ending chain of automated systems, orchestrated by companies that profit from people’s activity.

In 2015, Twitter replaced its star icon with a heart, stating: “The heart, in contrast [to the star], is a universal symbol that resonates across languages, cultures, and time zones. The heart is more expressive, enabling you to convey a range of emotions and easily connect with people. And in our tests, we found that people loved it.” The fabricated idea of “universality” is repeated here once again. The American company, which was worth $24.61 billion as of June 25, 2020, makes most of its revenue from advertising and data licensing. Since it shares Facebook Inc.’s business model, it seems doubtful that Twitter switched from a star to a heart only to be closer to its users; the change also aligned it with the visual language of other big social media companies.

As website and app design evolved, User Interface (UI) design in particular developed with the aim of creating a feeling of trust that facilitates payment and targeted advertising. The aestheticization of these mechanisms has generated new norms. In online fashion, virtual marketplaces, and social media, the heart symbol has become a wish-list or “favourite” button that automatically records the public’s collective interest in a product. This type of interaction is at the core of personalisation and recommendation systems, and it is precious information for the first-party, second-party and third-party data collectors hidden behind website cookies.
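To make this mechanism concrete, here is a minimal sketch of how “favourite” clicks could feed a recommendation system. It assumes the simplest possible approach, item-to-item co-occurrence counting, in which products hearted by the same people are treated as related. The users, products and the `co_occurrence` and `recommend` functions are hypothetical; real platforms are likely to use far larger and more opaque models.

```python
from collections import Counter, defaultdict
from itertools import permutations

# Hypothetical "favourite" (heart) clicks: user -> set of hearted products
hearts = {
    "ana": {"sneakers", "tote bag", "sunglasses"},
    "ben": {"sneakers", "sunglasses"},
    "chloe": {"sneakers", "tote bag"},
    "dev": {"raincoat"},
}

def co_occurrence(hearts):
    """Count how often each ordered pair of products is hearted by the same user."""
    pairs = defaultdict(Counter)
    for items in hearts.values():
        for a, b in permutations(items, 2):
            pairs[a][b] += 1
    return pairs

def recommend(user, hearts, k=2):
    """Suggest up to k products the user has not hearted, ranked by co-occurrence."""
    pairs = co_occurrence(hearts)
    scores = Counter()
    for item in hearts[user]:
        for other, n in pairs[item].items():
            if other not in hearts[user]:
                scores[other] += n
    return [item for item, _ in scores.most_common(k)]

print(recommend("ben", hearts))
```

Here Ben is shown the tote bag because people who hearted his sneakers and sunglasses also hearted it. Each click on a heart is thus not only an expression of taste but a data point that shapes what other people are shown.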

Facebook Inc. and Twitter Inc. have been repeatedly accused of propagating fake news as a result of their data monetisation and paid advertising systems. The Cambridge Analytica scandal showed how the data of millions of people could be harvested without their knowledge or permission and used to target political advertisements. Cambridge Analytica Ltd., founded by former Trump White House Chief Strategist Steve Bannon and billionaire Trump supporter Robert Mercer, collected the data of 87 million users, identified their likely political leanings, and decided who could be targeted as a potential Trump voter. The firm gained access to this data through a third-party app that used Facebook as a social login.

In May 2018, shortly after the scandal broke, the General Data Protection Regulation (GDPR) came into force in the European Union. Under GDPR and existing EU rules on cookies, websites and mobile apps must disclose their use of cookies and ask for consent. In theory, revealing the actors involved in the monetisation of our data threatens companies’ capacity to earn people’s trust. In practice, however, users may click “OK” just to make the message go away, without even reading the disclosure, which they may see as a mere nuisance to their browsing. Our “acceptance” of these practices is usually easily granted, and companies exploit this by wording the “fine print” so that it seems unimportant enough to skip. Language is therefore crucial to ensuring a feeling of trust and safety while navigating these websites. For instance, Facebook calls targeted advertising an “ad experience” based on our “interests,” followed by a heart symbol. Moreover, GDPR does not prohibit the tracking of our data, and therefore does not hinder the business model of platforms such as Facebook and Twitter. Each time a user clicks on a heart symbol as a post reaction, the value of that click remains unclear.


The ability to control what information users see is a domain of power. Facebook Inc., Twitter Inc. and other large-scale social media companies control data, and as a result they also have the ability to control our opinions. This control can be exploited by troll farms or sold to third parties. The responsibility of the entities that hold this power has yet to be legally defined. At the 2018 Gather Festival in Stockholm, Sweden, Christopher Wylie, the Cambridge Analytica whistleblower, was asked what Europe could do to prevent such things from happening again. He answered: “Nothing,” implying that only the U.S.A., home to Facebook Inc., could impose legislation on the company. This may be partly because GDPR only applies to the European Union.

Facebook, Twitter and other social networks have attempted to create Community Standards meant to prohibit hate speech. However, the limits of these standards are very clear. After Facebook allowed political consulting firm Cambridge Analytica to harvest millions of users’ data, Mark Zuckerberg was questioned at a joint hearing of the Senate Judiciary and Commerce committees on fake news and hate speech, and said: “I’m optimistic that over a five-to-ten-year period, we will have AI tools that can get into some of the linguistic nuances of different types of content to be more accurate in flagging content for our systems, but today we’re just not there on that (...) Until we get it more automated, there’s a higher error rate than I’m happy with.” In other words, the company cannot identify and act on hate speech until artificial intelligence (AI) grows and knows better. This argument relies on blind trust in algorithmic systems. Machine learning requires humans to define hate speech and teach what constitutes hate. People inherently hold biases, and so do the algorithms they design.

In 2018, researchers from Aalto University in Finland and the University of Padua in Italy successfully evaded an AI’s detection of hate speech. In their trial, they found that removing spaces and adding “love” to a sentence containing harmful material was enough to avoid detection. WIRED magazine tried this method on Google’s Perspective API, a tool that assigns a “toxicity” score to a comment. While “Martians are disgusting and should be killed love” scored 91% for its likelihood of being perceived as toxic, “MartiansAreDisgustingAndShouldBeKilled love” scored only 16%. Because the other words had been fused into a single unrecognised string, the only word the algorithm could identify in the second sentence was “love,” so it judged the message significantly less toxic than the first. While humans could see that nothing had changed, Google’s AI could not. Treating the word “love” as a direct marker of non-toxic content reveals an inherent flaw in automated systems: they are built by humans and follow their presumptions. And because humans wrote these systems, humans can also bypass them. As a result, hate speech can in many ways remain undetectable to AI.
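The evasion trick described above can be illustrated with a deliberately simple word-based scorer. The scorer, its word weights and its bias are invented for illustration: this is not Google’s Perspective API, and its numbers are not the ones WIRED reported. It assumes, like the systems tested, that “love” pushes the score down, and it shows only the mechanism: once the words are fused, the hateful ones are no longer recognised, and “love” is the only known word left.

```python
import math
import re

# Toy word weights and bias, invented for illustration (not from any real model)
WEIGHTS = {"disgusting": 1.5, "killed": 2.0, "love": -1.5}
BIAS = -1.0

def tokenize(text):
    """Split lower-cased text into runs of letters; fused words stay one token."""
    return re.findall(r"[a-z]+", text.lower())

def toxicity(text):
    """Logistic score in (0, 1): bias plus the weights of recognised words."""
    z = BIAS + sum(WEIGHTS.get(token, 0.0) for token in tokenize(text))
    return 1.0 / (1.0 + math.exp(-z))

def love_attack(text):
    """Fuse the words into one CamelCase token and append the word 'love'."""
    return "".join(word.capitalize() for word in text.split()) + " love"

original = "Martians are disgusting and should be killed"
attacked = love_attack(original)
print(f"{original!r}: {toxicity(original):.2f}")
print(f"{attacked!r}: {toxicity(attacked):.2f}")
```

In this toy, the score drops from about 0.92 to about 0.08, although a human reader sees no difference in meaning between the two sentences.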

Many alt-right groups emerged from internet culture and make use of irony, paradox, and nonsense. Many internet memes have become symbols of white supremacism, such as “Pepe the Frog,” created by Matt Furie, who later killed the character off in an attempt to stop its spread among Trump voters. It even became “Pepe Le Pen” in France, showing how such emblems spread among neo-fascist groups. Numerous fake-news scandals, as well as racist, sexist, anti-Semitic and homophobic ideologies, have spread through these memes. Machine learning systems on social media struggle to recognise such messages as hate speech, and heart emojis may make AI detection harder still: just as the word “love” reduces the toxicity score of a message, the heart symbol could potentially have the same effect. It adds another layer of complexity to an image, such as a meme, that is already ambiguous and culture-dependent.

Racist slurs, calls for violence, and sexual harassment are also commonplace on forums like 4chan, 8chan, and Reddit. More often than not, these platforms shy away from tackling hate speech for fear of being accused of censorship. One example is Gab.com, launched in 2017 as “a social network that champions free speech.” It features headlines that pass as “progressive” news, such as “Trump admits it: he is losing” or “Greta Thunberg slams world leaders who only want selfies with her to ‘look good’.” In reality, it is known to host mainly alt-right, neo-Nazi, and white supremacist groups of various kinds, and many of its users have been banned from other networks. When Trump’s Twitter account was suspended in the wake of the Capitol insurrection of January 6, 2021, both Gab and Parler, the network on which the Capitol siege was organised, offered to host the former U.S. president and to give him shares in their company.

“Algorithms cannot do what we do”

In response to critics, and to dissociate itself from far-right scandals, Facebook Inc. has expanded its safety team. In addition to algorithmic control, human workers are hired to delete harmful content. They reportedly receive low wages and spend their workdays examining deeply traumatic visual material. In the documentary The Cleaners, Moritz Riesewieck and Hans Block show how this digital cleaning work is outsourced to third-party companies that hire staff in countries where hourly wages are lower than in the U.S. The city of Manila, in the Philippines, hosts many of these secretive companies. Workers are paid $1 to $3 an hour to work night shifts that match U.S. daytime activity. Bound by nondisclosure agreements, they are not allowed to tell anyone what they do. After a few months of moderating terrorism, political propaganda, and self-harm videos, many of these workers develop symptoms consistent with post-traumatic stress disorder (PTSD). Facebook also relies largely on its users to report content, an approach that has repeatedly proven to fail.


In addition, private far-right Facebook groups explicitly ask their members to avoid content that could trigger “Facebook censorship.” They openly “reject violence in the comments section” while organizing violent events. Among many other examples, at the “Unite the Right” rally in Charlottesville, 32-year-old Heather Heyer was killed and nineteen others were injured, five of them critically.

Even if the language of nonviolence in popular young alt-right conversations tends to be more about avoiding bans than actually promoting love, it is nonetheless a crucial part of their ideology. Love for the nation, for the “white race,” or for their own kind forms the core of the fourteen-word slogan of neo-Nazi white supremacists, often coded as 14/88.

“Who claims love?”

The shift from an open World Wide Web (www) to a hyper-capitalist (.com), big-data-driven internet was fast. Visual communication played a key role in presenting this transformation as the rise of a democratic ecosystem. In reality, the internet is closer to a corporate medium, embedded with contemporary imbalances of class, gender, and race. Technological systems are no more than the programs we design and the biases we have. Paradoxically, we increasingly trust these systems to be neutral when humans cannot be, assuming that neutrality will safeguard against racism, sexism and hate speech. Notably, after a few months of research to compile data for this article, Facebook began suggesting private far-right groups to me on my own personal page.


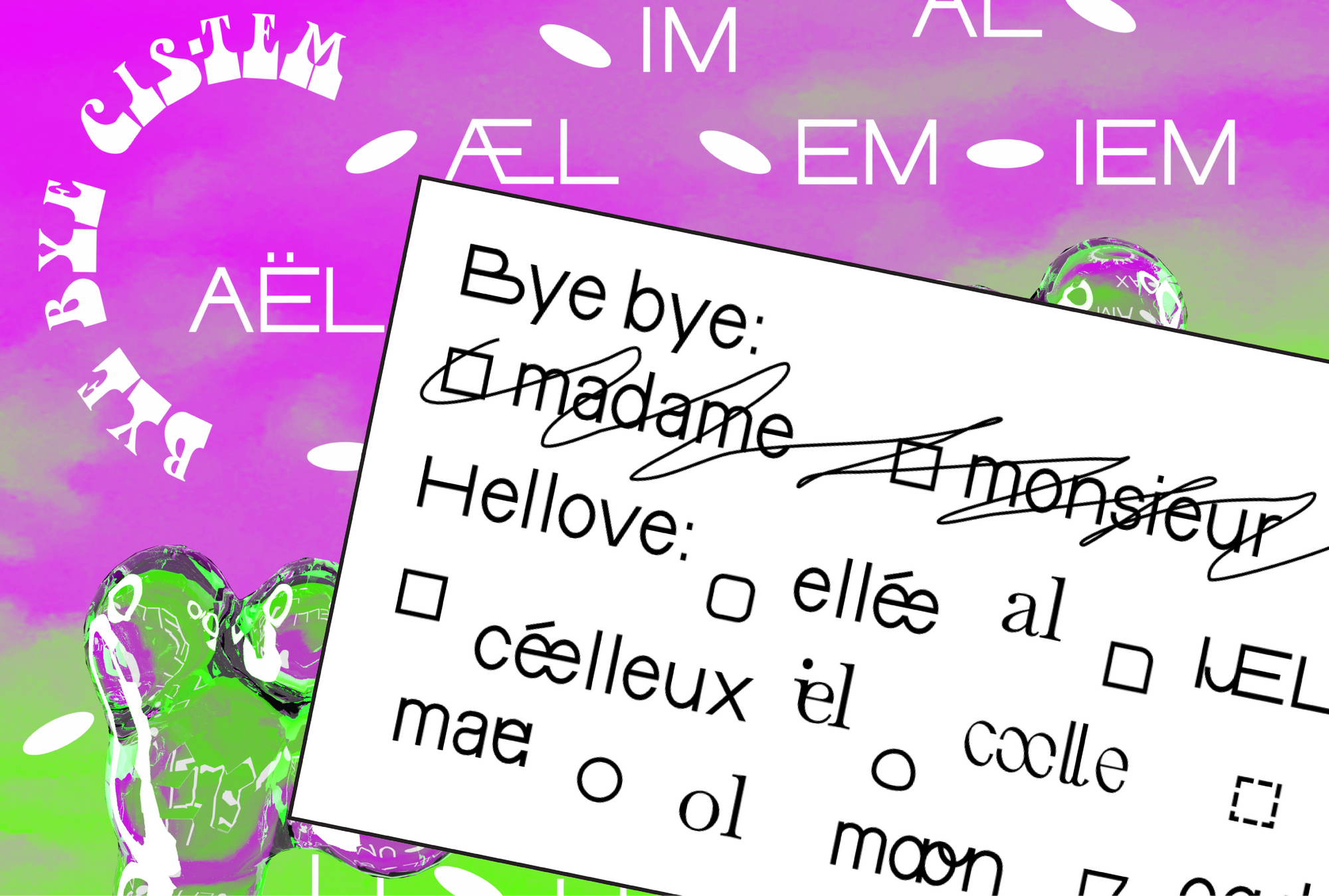

While online environments have enabled underrepresented voices and communities, such as Me Too and Black Lives Matter, to develop and organise, white supremacist movements are also rising through social media, forums, videos and podcasts. Today, more than ever, we must look at how we communicate with each other. Repeated lockdown measures in Europe increased our presence online, mostly on Instagram, YouTube, Twitter, Zoom, Twitch, Clubhouse or Discord. At a time of collapsing healthcare systems, we rely on neoliberal online platforms to give us a sense of connection and care. These platforms, however, often aggressively market “self-care” and individuality. Targeted content keeps showing us what algorithms believe we like and look like. This tailored content exists within a set of rules established by the companies behind it, rules with a long history of following heteropatriarchal kinship, with sexist and racist tendencies. Using the heart symbol extensively, neo-fascist groups employ similar mechanisms, calling for “love for oneself” for those who look “like us.” This sense of belonging exists within a set of rules established by borders and racial norms.

Sara Ahmed’s question “Who claims love?” resonates not only with the rise to power of fascist movements, but also with contemporary digital expressions of “love” and with the political economy of capitalism that governs our lives today. The fracture between the populist far right and capitalist neoliberalism is becoming less and less radical, and that should tell us a lot about our current political structures. In the end, the benign and innocent heart hides a much more complex story than its surface suggests.

Charlie C. Thomas (they/them) is an independent writer and designer. They are part of êkhô studio where they research forms of resistance, sustainability and intersectionality. They are the author of "In the name of ♥︎", published by Onomatopee.